Sounds like something your doctor should be vastly interested in for your recovery. But I'm sure your doctor will do nothing because following this up and acquiring it would amount to actual work. Would also help in objective gait analysis so correct rehab protocols can be assigned to correct your gait problems.

Egocentric vision-based detection of surfaces: towards context-aware free-living digital biomarkers for gait and fall risk assessment

Journal of NeuroEngineering and Rehabilitation volume 19, Article number: 79 (2022)

Abstract

Background

Falls in older adults are a critical public health problem. As a means to assess fall risks, free-living digital biomarkers (FLDBs), including spatiotemporal gait measures, drawn from wearable inertial measurement unit (IMU) data have been investigated to identify those at high risk. Although gait-related FLDBs can be impacted by intrinsic (e.g., gait impairment) and/or environmental (e.g., walking surfaces) factors, their respective impacts have not been differentiated by the majority of free-living fall risk assessment methods. This may lead to the ambiguous interpretation of the subsequent FLDBs, and therefore, less precise intervention strategies to prevent falls.

Methods

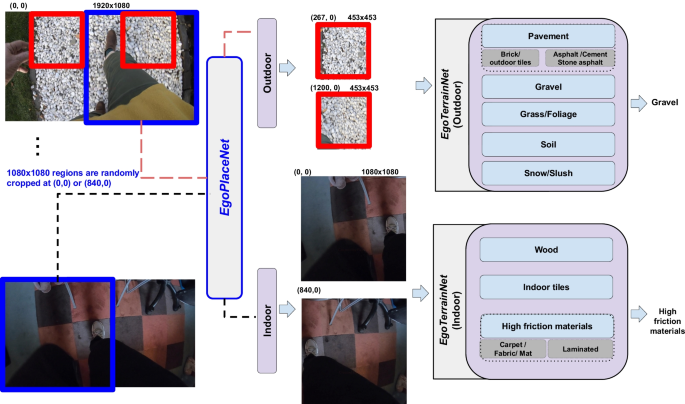

With the aim of improving the interpretability of gait-related FLDBs and investigating the impact of environment on older adults’ gait, a vision-based framework was proposed to automatically detect the most common level walking surfaces. Using a belt-mounted camera and IMUs worn by fallers and non-fallers (mean age 73.6 yrs), a unique dataset (i.e., Multimodal Ambulatory Gait and Fall Risk Assessment in the Wild (MAGFRA-W)) was acquired. The frames and image patches attributed to nine participants’ gait were annotated: (a) outdoor terrains: pavement (asphalt, cement, outdoor bricks/tiles), gravel, grass/foliage, soil, snow/slush; and (b) indoor terrains: high-friction materials (e.g., carpet, laminated floor), wood, and tiles. A series of ConvNets were developed: EgoPlaceNet categorizes frames into indoor and outdoor; and EgoTerrainNet (with outdoor and indoor versions) detects the enclosed terrain type in patches. To improve the framework’s generalizability, an independent training dataset with 9,424 samples was curated from different databases including GTOS and MINC-2500, and used for pretrained models’ (e.g., MobileNetV2) fine-tuning.

Results

EgoPlaceNet detected outdoor and indoor scenes in MAGFRA-W with 97.36

), which indicate the models’ high generalizability.

Conclusions

Encouraging results suggest that the integration of wearable cameras and deep learning approaches can provide objective contextual information in an automated manner, towards context-aware FLDBs for gait and fall risk assessment in the wild.

Background

Falls in older adults (OAs,

of falls in OAs, respectively [3]. To assess the exposure to risk factors, fall risk assessment (FRA) methods have been developed, which inform the selection and timing of interventions to prevent fall incidents. Commonly used clinician-administered tests in controlled conditions (e.g., Timed Up and Go [4]) can provide valuable insights on specific aspects of an OA’s intrinsic risk factors at discrete points in time. However, these in-lab/in-clinic approaches have exhibited a low-to-moderate performance in the identification of fall-prone individuals [5]. To address this limitation, recent attention has been focused on free-living FRAs using wearable inertial measurement units (IMUs) to assess OAs’ activities in their natural environments. Proposed free-living FRA approaches (e.g., 24 studies reviewed in [6]) have investigated relationships between falls and IMU-derived free-living digital biomarkers (FLDBs), primarily extracted from gait bouts [6]. Gait-related FLDBs include macro (e.g., quantity of daily: steps [7], missteps [8], and turns [9]) and micro (e.g., step asymmetry [7]) measures. Although these measures can be impacted by both intrinsic and environmental features [10,11,12], their respective impacts on FLDBs’ fall predictive powers have not been differentiated [6]. For instance, higher variability in the acceleration signal (measured by the amplitude of the dominant frequency in the mediolateral direction, as an FLDB) during gait could indicate appropriate adaptation to the environment [13] (and potentially a lower risk of falls) and/or exhibit gait impairment (and potentially a higher risk of falls) [14]. Similarly, frequent missteps (as an FLDB) detected in free-living IMU data can be an indicator of impaired dynamic balance control (and a higher risk for falls [8]) and/or false alarms generated by anticipatory locomotion adjustment while walking on an irregular terrain (e.g., construction site) [15]. This ambiguity in interpretation leads to less precise intervention strategies to prevent falls.
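To make the FLDB mentioned above concrete, here is a minimal sketch of how the amplitude of the dominant frequency of a mediolateral acceleration signal could be computed. It is not taken from the cited studies: the function name `dominant_frequency`, the mean-removal step, the single-sided amplitude scaling, and the synthetic test signal are all assumptions made for illustration, and a naive DFT is used only to keep the example dependency-free.

```python
import cmath
import math


def dominant_frequency(signal, fs):
    """Return (frequency_hz, amplitude) of the strongest non-DC spectral peak.

    Hypothetical helper: uses a naive O(n^2) DFT and a single-sided
    amplitude scaling (2 * |X_k| / n). The Nyquist bin is skipped so the
    same scaling applies to every bin that is examined.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [x - mean for x in signal]  # remove gravity/offset (DC) component

    best_freq, best_amp = 0.0, 0.0
    for k in range(1, (n + 1) // 2):  # positive-frequency bins below Nyquist
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(centered)
        )
        amp = 2.0 * abs(coeff) / n
        if amp > best_amp:
            best_freq, best_amp = k * fs / n, amp
    return best_freq, best_amp


# Synthetic "mediolateral acceleration": a 2 Hz sinusoid with amplitude 0.5,
# sampled at 50 Hz for 2 s (assumed values, not from the paper).
fs = 50
samples = [0.5 * math.sin(2 * math.pi * 2.0 * i / fs) for i in range(100)]
freq_hz, amplitude = dominant_frequency(samples, fs)
```

The same number can arise from different causes, which is the ambiguity the paragraph describes: the value alone does not say whether the walker was adapting to uneven ground or showing impaired gait.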

A context-aware free-living FRA would elucidate the interplay between intrinsic and environmental risk factors and clarify their respective impacts on the fall predictive powers of FLDBs. This would subsequently enable clinicians to target more specific intervention strategies including environmental modification (e.g., securing carpets and eliminating tripping hazards) and/or rehabilitation interventions (e.g., training to negotiate stairs and transitions). Ideally, a context-aware free-living FRA method would be capable of examining the relationships between the frequency of falls, FLDBs, and different environmental fall-related features such as the presence of dynamic obstacles (e.g., pedestrians, pets), unstable furniture, lighting conditions, and terrain types. As a step towards this longer-term goal, the focus of the present study is to develop an automated method to differentiate between different walking surfaces commonly observed in everyday environments.

A wrist-mounted voice recorder was previously utilized to capture contextual information following misstep events (trips) [16], which could be limited to observations made by the user and may lack spatial and temporal resolution. To objectively identify terrain types, several studies examined the feasibility of using wearable IMU data recorded during gait [17,18,19]. For instance, machine learning models achieved 89% accuracy (10-fold cross-validation) to detect six different terrains including soil and concrete using two IMUs in [17]. These studies investigated datasets mostly sampled from young participants in controlled conditions (i.e., walking repetitively over a few surface types with constant properties), and primarily reported machine learning models’ holdout or k-fold cross-validation measures. However, cross-validation approaches such as leave-one-subject-out (LOSO) or models’ assessment using independent test and training datasets represent a more reliable picture of models’ robustness against inter-participant differences and generalizability to unseen data [20, 21]. Additional file 1: Preliminary results for IMU-based surface type identification reports the drastic difference between the k-fold and LOSO results of machine learning models implemented using the same IMU data (an open access dataset [22]) to differentiate between the walking patterns over stairs, gravel, grass, and flat/even surfaces.
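The difference between k-fold and LOSO evaluation can be illustrated with a short sketch. It is a toy example, not the authors' evaluation code: the participant labels, the helper names (`loso_splits`, `kfold_splits`, `shared_subjects`), and the interleaved fold assignment are assumptions. It only shows why plain k-fold can place samples from the same participant in both the training and test sets, while LOSO cannot.

```python
from collections import defaultdict


def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one split per subject."""
    by_subject = defaultdict(list)
    for idx, sid in enumerate(subject_ids):
        by_subject[sid].append(idx)
    for held_out in sorted(by_subject):
        test_idx = by_subject[held_out]
        train_idx = sorted(
            i for sid, idxs in by_subject.items() if sid != held_out for i in idxs
        )
        yield held_out, train_idx, test_idx


def kfold_splits(n_samples, k):
    """Yield (train_indices, test_indices) for an interleaved k-fold that ignores subjects."""
    for fold in range(k):
        test_idx = [i for i in range(n_samples) if i % k == fold]
        train_idx = [i for i in range(n_samples) if i % k != fold]
        yield train_idx, test_idx


def shared_subjects(subject_ids, train_idx, test_idx):
    """Subjects that contribute samples to both the training and the test set."""
    return {subject_ids[i] for i in train_idx} & {subject_ids[i] for i in test_idx}


# Hypothetical dataset: three gait samples from each of three participants.
subjects = ["P1", "P1", "P1", "P2", "P2", "P2", "P3", "P3", "P3"]

loso_leaks = [shared_subjects(subjects, tr, te) for _, tr, te in loso_splits(subjects)]
kfold_leaks = [shared_subjects(subjects, tr, te) for tr, te in kfold_splits(len(subjects), 3)]
```

Here every LOSO split has an empty overlap, whereas every k-fold split shares all three participants between training and test data. That overlap lets a model benefit from participant-specific gait patterns, which is one plausible reason k-fold scores can look better than LOSO scores on the same data.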

Egocentric or first-person vision (FPV) data recorded by wearable cameras affords the ability to provide rich contextual information more readily than IMU-based data alone. Additionally, while third-person vision data captured by ambient cameras (e.g., Microsoft Kinect) could provide valuable contextual information in an unobtrusive manner, they are restricted to fixed areas and can be challenged by multiple residents with similar characteristics [23]. In contrast, FPV data can be recorded in any environment with which the camera wearer is interacting, including outdoors [21]. In [24], seven days of data were collected from fallers and controls during daily activities using ankle-mounted IMUs and a neck-mounted camera. Subsequently, the frames attributed to walking bouts were investigated and annotated manually. The most frequent terrain types manually identified across all participants were outdoors on pavement, and indoors on carpet and polished or hardwood flooring. Other terrain observations included grass, gravel, and multiple environments. However, the manual identification of walking surfaces, especially in large-scale free-living studies, is a laborious and inefficient process. To advance the field of free-living FRA and gait assessment, there exists a need to develop automated vision-based methods for terrain type specification.

Automated vision-based methods for terrain type identification have been investigated in other fields of assistive technology and robotics (mostly focused on outdoor terrain types [25,26,27]). For instance, in [28] head-mounted camera data were used for adaptive control of a legged (humanoid) robot’s posture and dynamic stability on different terrains. Engineered features such as intensity level distribution, complex wavelet transform, and local binary pattern were extracted and a support vector machine model was developed to categorize 1,000 training images into three classes: (a) hard (e.g., tarmac, bricks, rough metal); (b) soft (e.g., grass, soil, gravel, snow, mud); and (c) unwalkable (static and moving obstructions). Although useful, this approach may not provide sufficient descriptive information to inform FRA. For instance, while snow, gravel and grass were grouped into the same class, they would be expected to induce different patterns of gait. A relatively high accuracy of 82% was achieved when the model was applied to a 40-second video. However, this approach’s high computational cost was considered a limitation. Elsewhere, to control a powered prosthetic leg, a camera and IMU were mounted on the prosthetic and the relationship between image sharpness and acceleration was considered to trigger the camera [29]. Twenty minutes of data were collected from 5 able-bodied participants walking over 6 different types of terrain (asphalt, carpet, cobblestone, grass, mulch, and tile). Using a bag-of-words approach (SURF), an average classification accuracy of 86% was achieved based on 5-fold cross-validation. Deep learning approaches have shown strong potential to outperform engineered and bag-of-words-based approaches in many respects, particularly inference time and accuracy [30, 31]. By integrating both order-less texture details and local spatial information, a Deep Encoding Pooling Network model was developed [32]. The model was trained on the images in the Ground Terrain in Outdoor Scenes (GTOS) dataset [27], and tested on the GTOS-mobile dataset. The former contains 30,000 images across 40 outdoor terrain classes captured by a camera mounted on a mobile exploration robot with a fixed distance between the camera and the ground. GTOS-mobile data were captured by a mobile phone with a more flexible viewpoint, still relatively close to the ground. Although promising results were achieved, due to low intra-class diversity, limited viewpoint, and restriction to outdoor terrains, the GTOS(-mobile) models may not be generalizable to address the problem of terrain identification in complex everyday environments. More relevant to the context of FRA, data from a chest-mounted camera and Gabor Barcodes [33] were used to automatically detect 17 environmental fall-related features such as slope changes (e.g., ramps) and surfaces (e.g., gravel, grass). Although high (88.5%) accuracy was achieved, the incorporated dataset was restricted to young adults and limited to public environments, lacking at-home data. Moreover, only k-fold cross-validation results were reported.

[…] region for each frame cropped randomly either from right or left corner, the blue square) into indoor and outdoor, and (b) EgoTerrainNet, with Indoor and Outdoor versions, which classifies two 453×453 (red squares) and 1080×1080 patches based on the enclosed terrain type

To address the limitations of previous research, this paper employs a unique dataset, i.e., Multimodal Ambulatory Gait and Fall Risk Assessment in the Wild (MAGFRA-W), collected from older non-fallers and fallers in out-of-lab conditions and presents a vision-based deep framework to classify level walking surfaces (see Fig. 1). To maximize the framework’s generalizability and minimize its dependence on sample size, an independent training dataset with high intra-class variance was formed by curating data from relevant datasets, such as GTOS ("Assessing and augmenting models' generalizability" Section). The curated dataset includes the following 8 classes: (a) outdoor: pavement, grass/foliage, gravel, soil, and snow/slush; and (b) indoor: high-friction materials, tiles, and wood flooring. Subsequently, the framework’s generalizability to OAs’ data and its robustness against inter-participant differences were assessed (e.g., using LOSO cross-validation). The proposed framework provides one of the first investigations into the contextualization of free-living gait and fall risk assessment in OAs.
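The two-stage structure described here (a scene classifier that routes each frame to an indoor or outdoor terrain classifier) can be sketched as follows. This is only an illustration of the routing idea under stated assumptions, not the authors' implementation: the function `classify_walking_surface`, the stub models, and the dictionary-based "frames" and "patches" are hypothetical stand-ins for the fine-tuned ConvNets and real image data. Only the class names come from the text.

```python
from typing import Callable, Dict, List, Tuple

# Terrain classes as listed in the text, grouped by scene type.
TERRAIN_CLASSES: Dict[str, List[str]] = {
    "outdoor": ["pavement", "grass/foliage", "gravel", "soil", "snow/slush"],
    "indoor": ["high-friction materials", "tiles", "wood"],
}


def classify_walking_surface(
    frame: dict,
    patches: List[dict],
    place_model: Callable[[dict], str],
    terrain_models: Dict[str, Callable[[dict], str]],
) -> Tuple[str, List[str]]:
    """Stage 1: label the frame as indoor/outdoor. Stage 2: label each patch
    with the terrain model that matches the predicted scene type."""
    place = place_model(frame)
    if place not in terrain_models:
        raise ValueError(f"unexpected scene label: {place!r}")
    terrain_model = terrain_models[place]
    return place, [terrain_model(patch) for patch in patches]


# Stand-in models (assumptions): real models would be trained ConvNets.
def stub_place_model(frame: dict) -> str:
    return "outdoor" if frame["mean_brightness"] > 0.5 else "indoor"


def stub_outdoor_model(patch: dict) -> str:
    return "grass/foliage" if patch["greenness"] > 0.5 else "pavement"


def stub_indoor_model(patch: dict) -> str:
    return "wood" if patch["warmth"] > 0.5 else "tiles"


models = {"outdoor": stub_outdoor_model, "indoor": stub_indoor_model}
result = classify_walking_surface(
    {"mean_brightness": 0.8},
    [{"greenness": 0.9}, {"greenness": 0.1}],
    stub_place_model,
    models,
)
```

Routing by scene type means each terrain classifier only has to separate the classes that are plausible in its setting (five outdoor or three indoor), at the cost of propagating any indoor/outdoor error from the first stage.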
